Google has unveiled a new artificial intelligence model called Lumiere that can generate high-quality videos from text prompts.

According to Google, Lumiere can portray realistic, diverse and coherent motion in videos, which the company describes as a pivotal challenge in video synthesis. Lumiere uses a diffusion model built on an architecture called Space-Time U-Net, or STUNet, which handles both the spatial and temporal aspects of video generation. Unlike other models that create videos by stitching together individual frames, Lumiere produces the entire video in a single pass, resulting in smoother and more natural motion.

The model essentially creates a base frame from the text prompt and predicts how the objects in it will move in subsequent frames. Lumiere can generate 80 frames per clip, compared with 25 frames from Stable Video Diffusion.

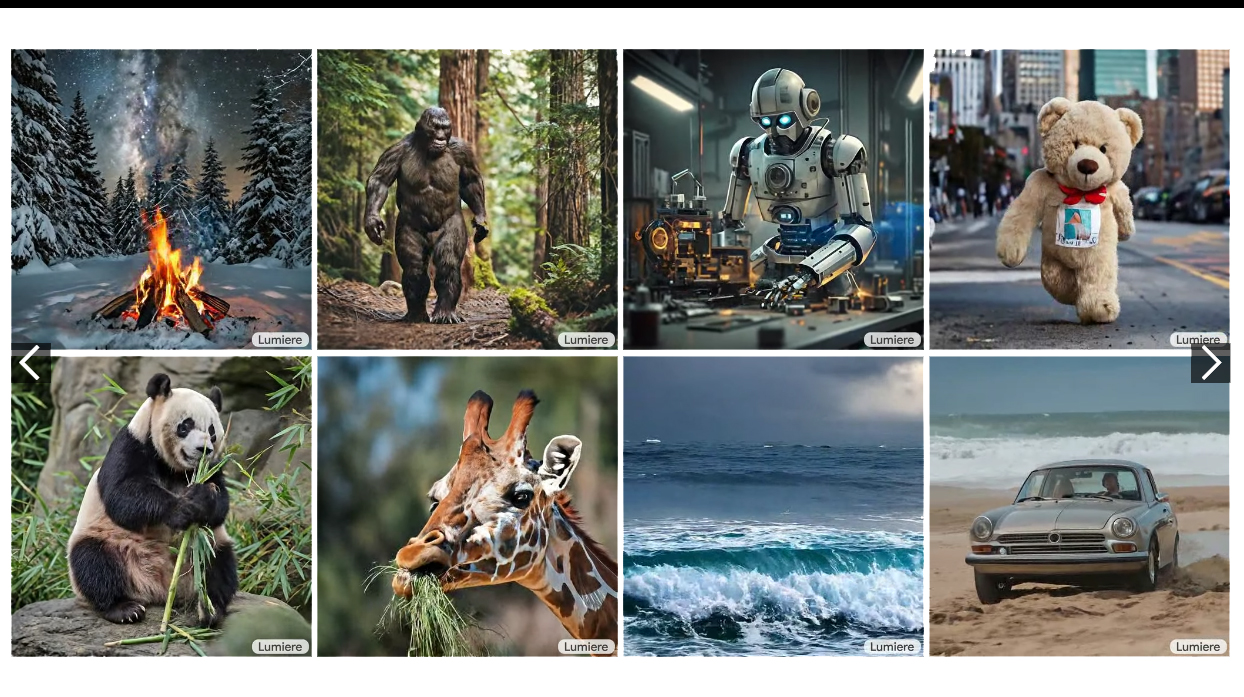

The Lumiere website shows several tiles of sample clips from the model in action. Google also shared examples of image-to-video generation.

Lumiere is not yet available for public testing. However, it does showcase Google’s potential to build an AI video platform that can rival existing text-to-video generators.

Source: Google Lumiere Via: ArsTechnica
