A week after rebranding Bard to Gemini and finally launching the AI tool in Canada, Google has announced the release of Gemini 1.5, a new version of its AI model that boasts “dramatic improvements” compared to its predecessor, Gemini 1.0.

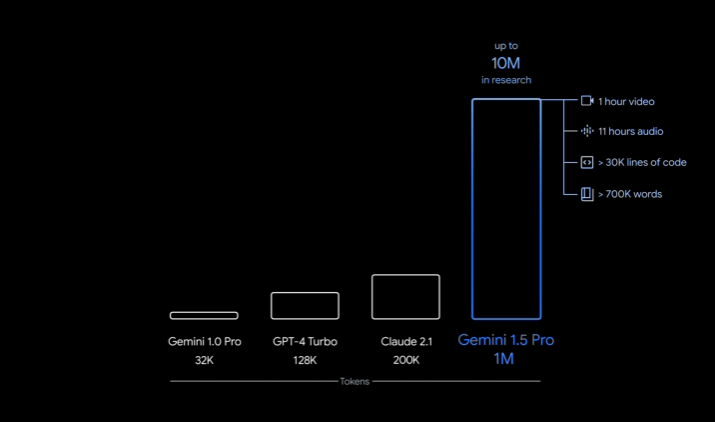

The primary benefit of the new model is that it can process significantly more information than Gemini 1.0. According to Google, the new model can run up to 1 million tokens consistently, “achieving the longest context window of any large-scale foundation model yet.”

“Longer context windows show us the promise of what is possible. They will enable entirely new capabilities and help developers build much more useful models and applications,” said Google CEO Sundar Pichai.

Google is first releasing Gemini 1.5 Pro for early testing. It comes with a standard 128,000-token context window. However, a limited group of developers and enterprise customers will be able to try the model with a context window of up to 1 million tokens through AI Studio and Vertex AI in a private preview. That larger window lets the model process inputs of roughly 700,000 words at once. For reference, the original Gemini 1.0 Pro's context window could process only 32,000 tokens at once.

Google says advances in its model architecture allow Gemini 1.5 to learn complex tasks more quickly than conventional models. In one example, Google gave the model a 402-page transcript of the Apollo 11 mission to the moon. The model was able to analyze and summarize the lengthy document and reason about conversations, events and details found throughout the transcript.


The model is also better at solving problems across longer blocks of code: it can take in more than 100,000 lines of code at once and explain how the different parts work.

“When tested on a comprehensive panel of text, code, image, audio and video evaluations, 1.5 Pro outperforms 1.0 Pro on 87% of the benchmarks used for developing our large language models (LLMs). And when compared to 1.0 Ultra on the same benchmarks, it performs at a broadly similar level,” wrote Google.

Google is offering a limited preview of Gemini 1.5 Pro to developers and enterprise customers. The company says it will introduce 1.5 Pro with a standard 128,000-token context window when the model is ready for a wider release.


Image credit: Google

Source: Google
