Since launching its streaming service in 2007, Netflix has become a ubiquitous form of entertainment, operating in over 190 countries around the world.

Because of that, some people may take the ability to stream thousands of TV series and movies on demand for granted, paying no mind to the assuredly massive technical demands associated with operating the platform.

To demonstrate this, Netflix invited journalists to take a tour through its Hollywood and Los Gatos offices in California. There, members of the press were able to learn about some of the sophisticated technology and work that go into running one of the world’s largest digital media and entertainment companies.

Here’s what MobileSyrup took away from the event.

Iterative infrastructure

“The internet keeps evolving, Netflix keeps evolving, what people want out of a service like Netflix keeps evolving, so it’s a constant series of different venues of invention in trying to make the service better and better in different environments around the world,” said Netflix vice president of content delivery Ken Florance during a presentation.

According to Florance, Netflix users around the world watch more than 140 million hours of content every single day. That number is only an average, with some days seeing much higher traffic volumes. January 7th, 2018 marked the company’s highest-ever day for streaming, Florance said, with 350 million hours of content watched on that day alone. To put that in perspective, that’s roughly 40,000 years’ worth of global viewing packed into a single day.

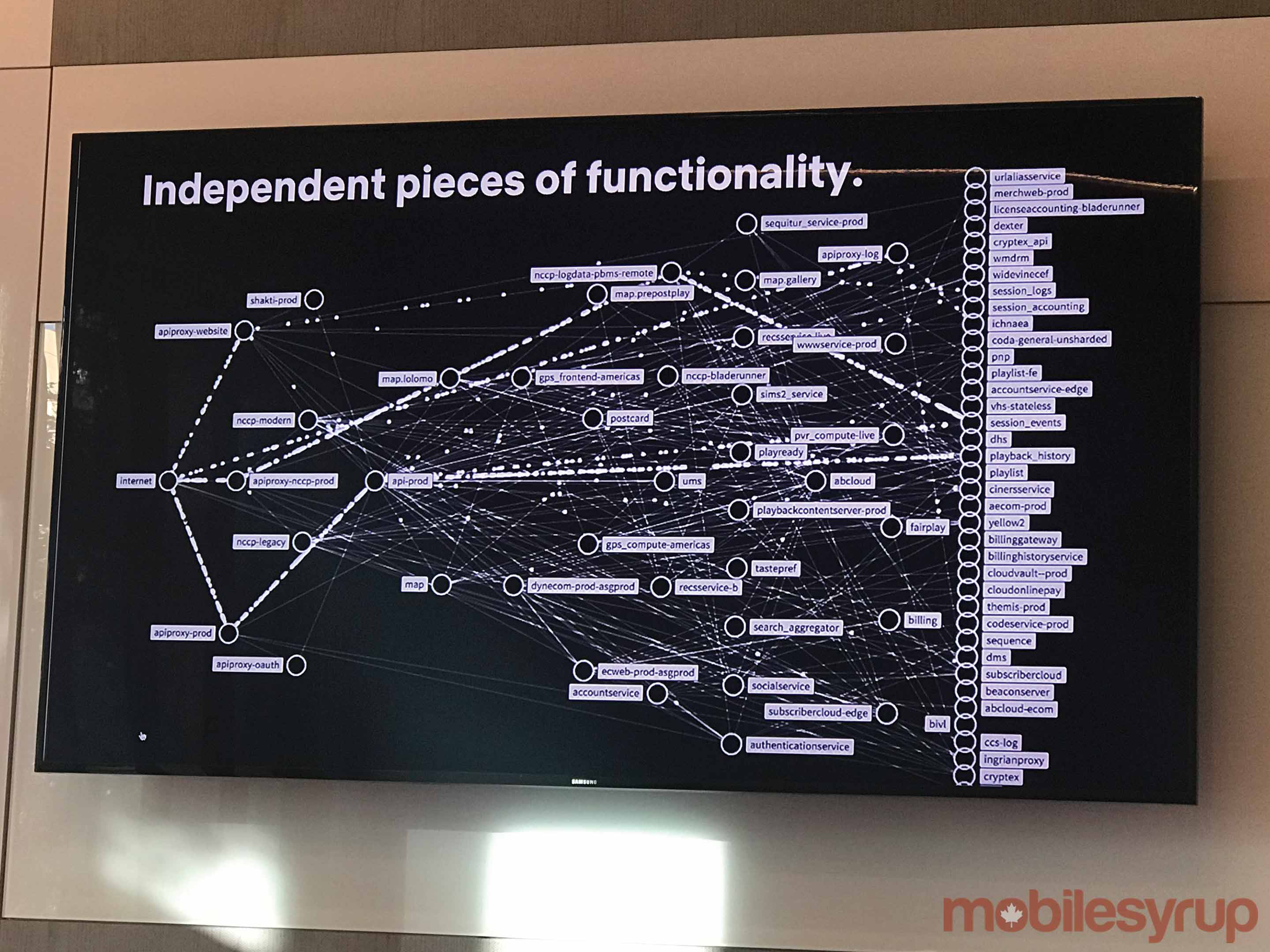

To keep up with that kind of ever-increasing demand, Florance said Netflix uses a robust streaming infrastructure composed of two primary components. The first element is the control system, which Florance said manages all of the ways users interact with Netflix. On a technical level, this system is responsible for how users log in to Netflix, how recommendations are determined and offered to them, where users pause and resume playback and more. The control system also retains global knowledge of internet routing systems and runs on Amazon Web Services (AWS) cloud technology.

The second component is Netflix’s custom-built content delivery network (CDN), which handles the actual streaming of audio and visual data to devices. Netflix’s CDN is powered by OpenConnect, a network of servers around the world that help guide users’ streams to the best network for them. Florance said these servers come in two forms: storage servers (built on spinning hard disks), which can each store 250TB and deliver about 20 to 40Gbps, and flash servers, which are used for the most popular content and can deliver up to 100Gbps. Netflix engineers typically add content to these servers overnight or at other times when app traffic is slower, in order to avoid disrupting users at peak traffic times.

According to Florance, any content that Netflix knows will be among its hottest titles (like the recently launched second season of Marvel’s Jessica Jones) will be loaded onto practically every server on its network. Being able to pre-position content in such a way gives Netflix an advantage that platforms like Facebook or YouTube don’t have, Florance said. He noted this is because content on those platforms is user-generated and, therefore, cannot be prepared for in the way the launch of all thirteen new episodes of Jessica Jones can be.

On the other hand, it can sometimes be difficult to maintain quality streaming in real-time, Florance said. “We’re streaming data in from the network and you’re playing it out to your screen, whether that screen is a TV or a phone or whatever,” Florance explained. “Our job is to get the data across the network faster than it’s playing out to your screen.”

To do that, Netflix uses an adaptive streaming engine to monitor and manage data coming in from the network, a system that Florance likens to keeping a bucket from running dry. “You’ve got a bucket that you’re filling with water from a hose, which represents the data coming in from the network,” he said. “It’s draining out, which represents what you’re watching on the screen. And our job is not to let that bucket empty out, basically, which is equivalent to buffering on your device.”
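Florance’s bucket can be sketched in a few lines of Python. This is only a toy model of the analogy, not Netflix’s adaptive streaming engine: the function names, the four-second starting buffer, the 0.8 safety margin and the three-step bitrate ladder are all assumptions made for illustration.

```python
def simulate_buffer(throughput_kbps, bitrate_kbps, startup_buffer_s=4.0, dt=1.0):
    """Return the seconds of video left in the buffer after each time step.

    throughput_kbps: measured network speed for each step (the hose).
    bitrate_kbps:    bitrate of the video being played (the drain).
    """
    buffer_s = startup_buffer_s
    levels = []
    for throughput in throughput_kbps:
        # Filling: dt seconds of download buys throughput/bitrate * dt seconds of video.
        buffer_s += dt * throughput / bitrate_kbps
        # Draining: playback uses up to dt seconds of video; the bucket cannot go below empty.
        buffer_s = max(0.0, buffer_s - dt)
        levels.append(buffer_s)
    return levels


def count_empty_steps(levels):
    """Count the steps at which the bucket ran dry (the viewer would see buffering)."""
    return sum(1 for level in levels if level == 0.0)


def pick_bitrate(ladder_kbps, measured_kbps, safety=0.8):
    """Pick the highest bitrate that fits within a safety margin of the measured speed.

    Falls back to the lowest bitrate when nothing fits. The ladder and the
    safety margin are hypothetical values, not Netflix's.
    """
    affordable = [b for b in ladder_kbps if b <= measured_kbps * safety]
    return max(affordable) if affordable else min(ladder_kbps)


if __name__ == "__main__":
    slow_network = [375] * 10  # kbps, half of what a 750 kbps stream needs
    levels = simulate_buffer(slow_network, bitrate_kbps=750)
    print("buffer levels:", levels)
    print("empty steps:", count_empty_steps(levels))
    print("suggested bitrate:", pick_bitrate([250, 750, 1500], measured_kbps=375))
```

When the network delivers data faster than the video plays, the buffer grows; when it is slower, the buffer drains, and dropping to a lower bitrate is one way to keep it from emptying.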

Enter the (encoding) matrix

Netflix’s success in keeping the proverbial bucket from emptying out is largely dependent on the work of the video algorithms team, led by director Anne Aaron. According to Aaron, her group is responsible for compressing video files to ensure users experience seamless playback at the highest quality. For example, she mentioned that the final episode of season two of Jessica Jones, titled “Playland,” was delivered to her team as 293GB of raw video. Such a large amount of raw video would not fit in the OpenConnect boxes, Aaron said, so her team had to compress the video hundreds (or in other cases, even tens of thousands) of times.

This process is particularly essential for Netflix users who may experience low-bandwidth connections, unreliable networks or fixed data plans. As an example, Aaron pointed to her home country of the Philippines, where some of the more expensive service plans offer 4GB of data — an amount that initially might not offer much opportunity to consume content.

“If we were just using standard algorithms and not trying to optimize our encoding, and sent the video at 750Kbps, this would amount to only 10 hours of streaming. [With that], you couldn’t even watch an entire season of one show,” Aaron said. After optimizing the encoding, she said someone in the Philippines could benefit from at least 26 hours of streaming on that same data plan.
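A rough check of that arithmetic, as a sketch only: it assumes a 750Kbps video stream, decimal gigabytes (1GB = 10^9 bytes), and no audio or network overhead, so the results come out somewhat more generous than the figures Aaron quoted. The function names are hypothetical.

```python
def streaming_hours(plan_gb, bitrate_kbps):
    """Hours of video a data plan covers at a constant bitrate (video only)."""
    plan_bits = plan_gb * 1e9 * 8                # gigabytes -> bits
    seconds = plan_bits / (bitrate_kbps * 1e3)   # bits / (bits per second)
    return seconds / 3600


def max_bitrate_kbps(plan_gb, hours):
    """Highest average bitrate that lets a data plan last the given number of hours."""
    plan_bits = plan_gb * 1e9 * 8
    return plan_bits / (hours * 3600) / 1e3


if __name__ == "__main__":
    print(f"4GB at 750Kbps lasts about {streaming_hours(4, 750):.1f} hours")
    print(f"26 hours on 4GB needs about {max_bitrate_kbps(4, 26):.0f}Kbps or less")
```

Under these assumptions, a 4GB plan lasts just under 12 hours at 750Kbps, and stretching it to 26 hours requires an average of roughly 340Kbps or lower.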

To get to that point, though, Netflix had to go through several different iterations of the encoding process. First, in 2015, Aaron said her team realized that not every title in Netflix’s catalogue required the same amount of encoding work, even though they were all encoded through the exact same process. For example, she stated that BoJack Horseman (with its animation style and scenes mainly featuring characters standing around and talking) didn’t need to be encoded to the level that something like Jessica Jones (a live-action drama with a lot of motion) would call for.

After that discovery, the team switched to encoding on a per-title basis, Aaron said, although improvements didn’t stop there. “The complexity or the type of content that one show or one movie has could [sometimes] be changing,” she said. “So if we’re optimizing per title then this still isn’t being efficient.” She mentioned that although animated titles like BoJack Horseman or Barbie: Life in the Dreamhouse required fewer bits in general, there were select episodes where the animation was more complex and therefore more difficult to encode.

Aaron said her team’s response was “let’s optimize the encoding on a per chunk basis,” a change that first took effect in 2016. She pointed out that this improvement reduced file sizes while leaving video quality intact, which helped with the download feature that launched in November 2016.

“But of course, we didn’t stop attacking the problem, just like Jessica Jones would,” Aaron said with a laugh. Her team’s most recent update to the encoding process is the addition of what Netflix calls a ‘dynamic optimizer encoding framework.’ She said the most natural way to segment a video for encoding is by shot: a sequence of frames that are very similar to one another or have very uniform quality, such as a few characters seen in the same lighting against the same background.

“So we segment the video into shots, we analyze the content per shot and we make encoding decisions based on that shot, as well as other shots in the video, so that we can allocate the bits optimally with the overall goal of maintaining the best quality possible with the least amount of bits,” she explained. In learning over time about the intricacies of encoding for a massive streaming service, Aaron said her team has been able to find the most efficient method to date.
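The idea Aaron describes (split into shots, then spend bits where they are needed) can be illustrated with a deliberately simplified Python sketch. It is not Netflix’s dynamic optimizer: representing each frame by a single number, detecting a cut with a fixed threshold, and sharing bits in proportion to shot length times a complexity score are all assumptions made for the example.

```python
def split_into_shots(frame_signatures, threshold=30.0):
    """Group consecutive frame indices into shots.

    frame_signatures: one number per frame (for example, average brightness).
    A new shot starts whenever the signature jumps by more than `threshold`
    from one frame to the next. Both the signature and the threshold are
    hypothetical stand-ins for real shot detection.
    """
    if not frame_signatures:
        return []
    shots = [[0]]
    for i in range(1, len(frame_signatures)):
        if abs(frame_signatures[i] - frame_signatures[i - 1]) > threshold:
            shots.append([i])       # large jump: treat as a cut, start a new shot
        else:
            shots[-1].append(i)     # similar frame: stays in the current shot
    return shots


def allocate_bits(shot_lengths, shot_complexities, total_bits):
    """Share a bit budget across shots in proportion to length * complexity."""
    weights = [n * c for n, c in zip(shot_lengths, shot_complexities)]
    total_weight = sum(weights)
    return [total_bits * w / total_weight for w in weights]


if __name__ == "__main__":
    signatures = [10, 12, 11, 90, 92, 20]   # made-up per-frame values
    shots = split_into_shots(signatures)
    lengths = [len(shot) for shot in shots]
    complexities = [1.0, 2.0, 1.0]          # made-up: the middle shot is "busier"
    print("shots:", shots)
    print("bits per shot:", allocate_bits(lengths, complexities, total_bits=800))
```

A simple, static shot receives fewer bits than a busy one of the same length, which is the intuition behind spending the budget shot by shot rather than uniformly.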

To deploy the latest system, Netflix adopted an automated metric that could maintain a standard of video quality. This was achieved by performing subjective tests in which many people judged what made up optimal video playback. Netflix then used this data to train a machine learning algorithm to predict how a human would perceive a video and to evaluate it accordingly. Aaron also said Netflix adopted the latest video formats and conducted extensive research into next-generation codec technology.

Through all these efforts, Aaron said Netflix has demonstrated marked improvements in streaming. For example, she said that video at 70 percent picture quality (above average but imperfect) would previously have been encoded at 750Kbps. Now, the same quality can be achieved at 270Kbps, marking a 64 percent decrease.
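The size of that saving follows directly from the two quoted bitrates. A minimal check in Python (the function name is just for illustration):

```python
def percent_reduction(old_value, new_value):
    """Percentage decrease going from old_value to new_value."""
    return (old_value - new_value) / old_value * 100


if __name__ == "__main__":
    saving = percent_reduction(750, 270)  # Kbps before and after, as quoted
    print(f"Bitrate reduction: {saving:.0f} percent")
```

Going from 750Kbps to 270Kbps removes 480 of every 750 kilobits, or 64 percent.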

Aaron said that over the past year, Netflix has painstakingly re-encoded its entire library using the improved algorithm. So far, the updated catalogue is available on Netflix on Android, iOS, PlayStation 4 and Xbox One, while beta tests are ongoing for the browser and TV versions of the streaming service.

Testing, testing, testing

All of the servers and encoding technology in the world would mean nothing without platforms to run on. Currently, Netflix is available on over 1,700 models of devices around the world, including TVs, computers, phones, tablets and more. Because of this, the company needs to continuously conduct tests on each supported device in order to maintain quality streaming across the board. To help with this process, Netflix uses what it calls a mobile automation lab.

At first glance, the lab seems rather unassuming, with many cables, some computers and a handful of Netflix Originals-themed lockers. However, the room houses a complex series of Wi-Fi networks that simulate connections of varying strength to emulate how a user’s experience may be affected by different circumstances, such as a location where signals are weaker. According to Scott Ryder, Netflix’s director of mobile streaming, the company has also managed to create and store six miniature cell towers within the lab to test cellular connections in a similar capacity.

The lockers, meanwhile, are used to house devices like phones and tablets that support Netflix. There, devices are connected to an automated testing system that enables Netflix to police them every few hours, as well as send data to the developers to monitor both positive and negative changes.

Ryder said that each day, Netflix runs at least 135,000 tests in this lab, which produce reports containing several terabytes’ worth of data for developers. In its Los Gatos headquarters, Netflix aims to have at least one model of every device it runs on to use in these tests, even those that may not be as commonly used anymore. For example, at one point during a presentation, a pair of Netflix developers passing by mentioned they were going to grab an Xbox 360 and Wii U from storage, two older-generation consoles that are now discontinued. The lab’s automated system is also updated weekly to ensure stability and security, Ryder said.
