Google’s Pixel phones have been widely praised for their camera technology. The Pixel 3 and 3 XL bring even more enhancements, and Super Res Zoom is one of the more interesting ones.

When Google first announced the feature, it said the camera would rely on the movement of your hands to make zoomed photographs look better. While that idea seems counterintuitive — how can my shaky hands make my photo better? — the results of Super Res Zoom speak for themselves.

Pixel 2 vs Pixel 3 at 2x zoom

The search giant claims that 2x zoom using its digital system is comparable to the 2x optical lens systems used on competing phones. Impressively, the claim holds up.

Thankfully, the company has posted an in-depth breakdown of Super Res Zoom on its AI blog, so we can see how it works.

To understand Super Res Zoom, you need to understand demosaicing

Demosaicing is a process that fills in colour information that’s missing from an image. Typical consumer and smartphone cameras use sensors designed to measure the intensity of light, not its colour.

To capture colour, cameras use a colour filter array placed in front of the sensor. This array makes each pixel capture only one colour (red, green or blue). Cameras usually use a Bayer pattern (shown above) for the array.
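To make the filter array concrete, here is a minimal Python sketch of how a Bayer mosaic samples a full-colour scene. It assumes the common RGGB tile layout (other Bayer variants shift the tile), and the function names `bayer_channel` and `mosaic` are illustrative, not part of any camera API.

```python
# Minimal sketch of a Bayer colour filter array, assuming an RGGB tile.
# Each sensor pixel records only the channel its filter lets through.

def bayer_channel(row: int, col: int) -> str:
    """Return which colour the filter over pixel (row, col) passes (RGGB layout)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Simulate a sensor: keep one channel per pixel, as the filter array would.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    Returns a list of rows of single intensity values.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


if __name__ == "__main__":
    # Print the filter layout of a 4x4 patch: half of the sites are green.
    for r in range(4):
        print(" ".join(bayer_channel(r, c) for c in range(4)))
```

Each 2x2 tile holds one red, two green and one blue filter, so every pixel throws away two of the three colour values in the scene.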

Demosaicing makes a best guess at the missing colour information based on the colour information from surrounding pixels. According to Google, about two-thirds of an RGB digital picture is a reconstruction.
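The arithmetic behind Google’s two-thirds figure is simple: each output pixel needs three values (red, green and blue), but the sensor measures only one, so two out of three are guessed. The Python sketch below pairs that arithmetic with a deliberately naive demosaicing method that averages neighbouring pixels; it assumes an RGGB layout and is far cruder than what real camera pipelines use. The function names are illustrative.

```python
# Naive demosaicing sketch: estimate each missing channel by averaging the
# neighbouring pixels (3x3 window) whose filter recorded that channel.
# Real camera pipelines use far more sophisticated, edge-aware methods.

def bayer_channel(row, col):
    """RGGB layout: which channel the filter over (row, col) passes."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Rebuild an (r, g, b) value for every pixel of a single-channel mosaic.

    raw must be at least 2x2 so every 3x3 window contains all three channels.
    """
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            pixel = []
            for ch in "RGB":
                if bayer_channel(r, c) == ch:
                    pixel.append(float(raw[r][c]))  # measured directly
                    continue
                # Guess the missing value from neighbours that did measure it.
                samples = [
                    raw[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                    if bayer_channel(rr, cc) == ch
                ]
                pixel.append(sum(samples) / len(samples))
            row.append(tuple(pixel))
        out.append(row)
    return out


def reconstructed_fraction():
    """Each pixel needs 3 values but the sensor records 1, so 2 of 3 are guessed."""
    return (3 - 1) / 3
```

For a 2x2 mosaic `[[1, 2], [3, 4]]`, the top-left pixel keeps its measured red value (1), borrows blue from its diagonal neighbour (4) and averages the two greens (2.5). Only the red value is real data.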

Using the Bayer array to your advantage

Part of the reason digital zoom doesn’t look great is this demosaicing process. Digital zoom essentially crops the picture and enlarges it, relying on reconstructed colour information to fill in the extra pixels while drawing on less real data from the sensor.
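As a rough illustration of why a crop-and-enlarge zoom can’t add detail, the Python sketch below crops the centre of an image and stretches it back to full size. It uses nearest-neighbour enlargement for clarity; that choice, and the `digital_zoom` name, are assumptions for illustration, since phones typically use smoother interpolation.

```python
# Sketch of plain digital zoom: crop the centre, then enlarge it back to the
# original size. Nearest-neighbour enlargement is used for clarity; smoother
# interpolation looks nicer but still adds no real detail.

def digital_zoom(image, factor):
    """Crop the central 1/factor of a 2-D list and stretch it to the original size."""
    h, w = len(image), len(image[0])
    ch, cw = int(h / factor), int(w / factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in image[top:top + ch]]
    # Each output pixel copies the nearest pixel of the crop.
    return [
        [crop[int(r * ch / h)][int(c * cw / w)] for c in range(w)]
        for r in range(h)
    ]
```

At 2x zoom on a 4x4 image, the 16 output pixels are filled from just four measured values, and each of those was itself mostly a demosaicing guess.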

However, by combining burst photography with hand motion, Google created a system that can gather more colour information.

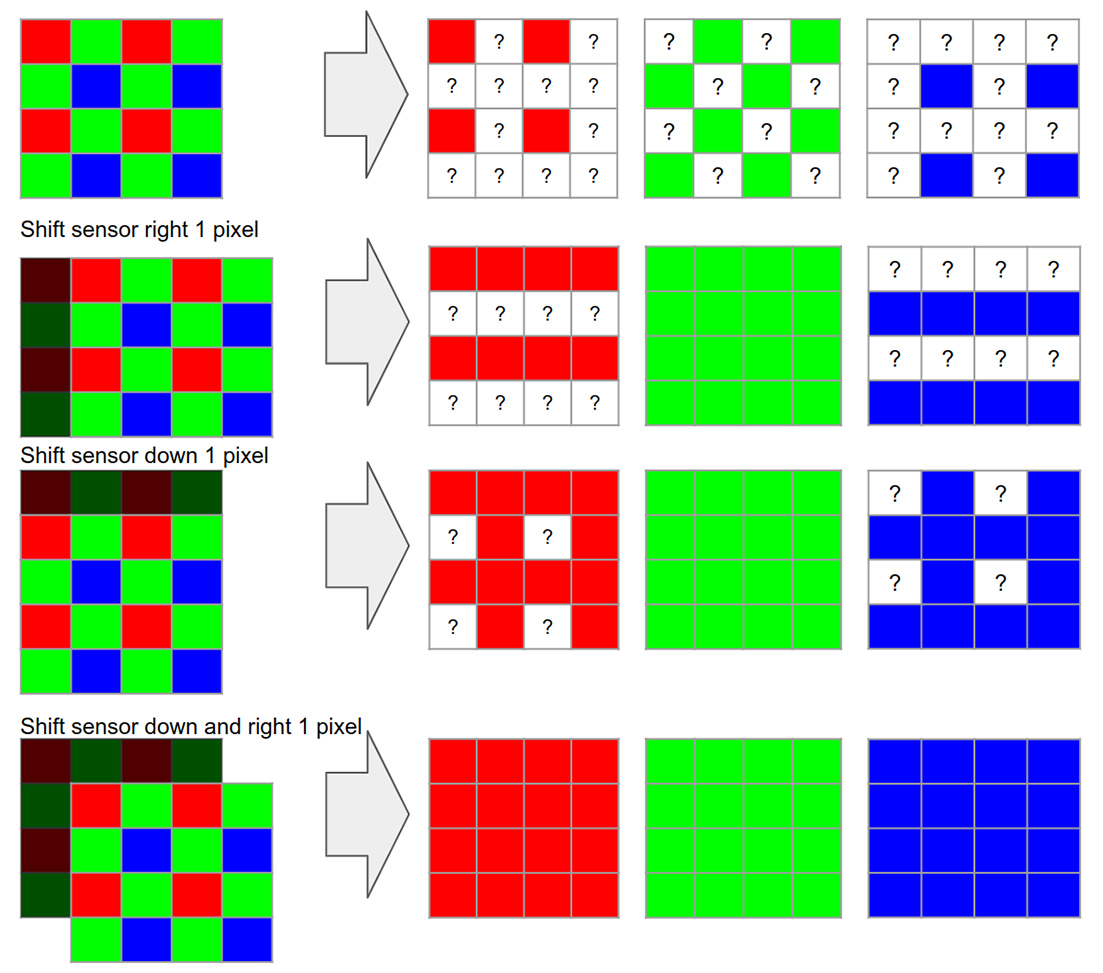

Essentially, the system merges a burst of low-resolution images onto a high-resolution grid. The diagram above shows an idealized version of the process.

In the diagram, the camera captures four frames, three of which are shifted by exactly one pixel: one horizontally, one vertically and one in both directions at once.

This fills in all the ‘holes’ in the Bayer array, reducing or eliminating the need for demosaicing.
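The idealized four-frame case can be checked with a short Python sketch. It assumes an RGGB layout and whole-pixel offsets that are known in advance; real hand motion produces arbitrary sub-pixel offsets that have to be estimated. The function `merge_burst` and the `OFFSETS` list are illustrative names.

```python
# Idealized sketch of the burst merge: four frames, each offset by exactly
# 0 or 1 pixel horizontally and/or vertically. Offsets are assumed known
# and whole-pixel, which real hand motion does not guarantee.

def bayer_channel(row, col):
    """RGGB layout: which channel the filter over (row, col) passes."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


OFFSETS = [(0, 0), (0, 1), (1, 0), (1, 1)]  # (dy, dx) per frame


def merge_burst(scene, offsets=OFFSETS):
    """For each scene pixel, collect the channel values measured across the burst.

    scene is a list of rows of (r, g, b) tuples; returns rows of dicts
    mapping channel name to measured value.
    """
    h, w = len(scene), len(scene[0])
    index = {"R": 0, "G": 1, "B": 2}
    measured = [[{} for _ in range(w)] for _ in range(h)]
    for dy, dx in offsets:
        for y in range(h):
            for x in range(w):
                # With the sensor shifted by (dy, dx), scene point (y, x) lands
                # on sensor pixel (y - dy, x - dx) and gets that filter's colour.
                ch = bayer_channel(y - dy, x - dx)
                measured[y][x][ch] = scene[y][x][index[ch]]
    return measured
```

With only the first frame, every pixel has a single measured channel; with all four, every pixel has red, green and blue measured directly, so nothing needs to be guessed.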

Interestingly, the Pixel 3 will jiggle its lens if there isn’t enough movement for Super Res Zoom. You can see this in action if you stabilize the phone and go to the maximum 7x zoom.

If Super Res Zoom doesn’t detect enough hand shake to collect data to create a zoomed image, it will move the lens on its own to gather more data. Look at it wiggle.

(gif by @billiamjoel ) pic.twitter.com/ZwWfcTzZfA

— Dieter Bohn (@backlon) October 14, 2018
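The decision described above can be sketched in a few lines of Python. This is a hypothetical illustration, not Google’s logic: it assumes an alignment step has already estimated each frame’s displacement in pixels, and the `needs_forced_motion` name and the 0.5-pixel threshold are invented for the example.

```python
# Hypothetical sketch: if the frames in a burst barely moved relative to each
# other, they all sample the scene at the same positions and add no new
# colour information, so the camera should introduce motion itself.
# The threshold is an illustrative assumption.
import math


def needs_forced_motion(offsets, min_spread=0.5):
    """Return True if the burst's frame offsets (in pixels) are too clustered.

    offsets: list of (dy, dx) displacements of each frame, as an alignment
    step might estimate them.
    min_spread: hypothetical minimum distance, in pixels, that at least one
    frame must have moved from the first for the burst to be useful.
    """
    if len(offsets) < 2:
        return True
    y0, x0 = offsets[0]
    spread = max(math.hypot(dy - y0, dx - x0) for dy, dx in offsets[1:])
    return spread < min_spread
```

A phone resting on a table would report offsets such as `[(0, 0), (0.01, 0.02)]` and trigger forced motion, while a handheld burst that wanders by a pixel or so would not.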

Like everything, it isn’t that simple

Unfortunately, Google had to clear a lot of hurdles to make Super Res Zoom work.

For one, subjects in a scene move around, so the algorithm also has to contend with motion that doesn’t come from the camera. Movement can also pack samples densely into one area of the photo while leaving others sparse. Since Super Res Zoom relies on interpolating data to fill in the blanks, this uneven distribution of information can throw off that process.
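A quick Python simulation shows why random motion spreads samples unevenly. It assumes each frame’s sub-pixel offset is drawn uniformly at random and looks at a single low-resolution pixel split into a 2x2 grid of finer cells; both are simplifications chosen for illustration, and `sample_counts` is a hypothetical name.

```python
# Sketch of why random hand motion produces uneven sample density: each
# frame, shifted by a random sub-pixel offset, drops its sample into one
# cell of a finer output grid. Some cells collect several samples, others
# none. Uniform random offsets are an illustrative assumption.
import random


def sample_counts(num_frames, zoom=2, seed=0):
    """Count how many samples land in each of the zoom x zoom sub-pixel cells.

    Only the fractional part of a frame's offset decides which fine cell its
    sample falls into, so one low-resolution pixel is enough to study.
    """
    rng = random.Random(seed)
    counts = [[0] * zoom for _ in range(zoom)]
    for _ in range(num_frames):
        dy, dx = rng.random(), rng.random()  # sub-pixel offset in [0, 1)
        counts[int(dy * zoom)][int(dx * zoom)] += 1
    return counts
```

Printing `sample_counts(8)` shows how eight frames happen to fall; an even two-per-cell split would be a coincidence, which is why the merge has to cope with crowded and empty regions alike.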

Additionally, Google says that a single image from a burst photograph can be noisy, even in good lighting conditions. The algorithm has to produce clean results despite that noise.
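The simplest version of why a burst helps with noise is plain averaging: if each frame’s noise is independent, the average of N frames has noise reduced by a factor of about the square root of N. The Python sketch below checks that numerically. It assumes Gaussian noise with a known standard deviation and perfectly aligned frames; Super Res Zoom’s actual merge is considerably more involved than a straight average.

```python
# Worked example: averaging N frames with independent noise shrinks the
# noise's standard deviation by about sqrt(N). Gaussian noise and perfect
# alignment are illustrative assumptions.
import math
import random
import statistics


def averaged_noise_std(num_frames, sigma=10.0, trials=20000, seed=1):
    """Empirical std of the mean of num_frames noisy readings of one pixel."""
    rng = random.Random(seed)
    true_value = 100.0
    means = [
        statistics.fmean(rng.gauss(true_value, sigma) for _ in range(num_frames))
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


def expected_noise_std(num_frames, sigma=10.0):
    """Theory: sigma / sqrt(N) for N independent, equally noisy frames."""
    return sigma / math.sqrt(num_frames)
```

With four frames and a per-frame noise level of 10, theory predicts 5, and the simulation lands very close to that.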

Google has solutions

Google solved some of these problems with clever algorithm design.

For example, Google’s algorithm uses edge detection when merging images. When the algorithm blends pixels, it does so along an edge instead of across it. This allows Google to strike a balance between noise reduction and enhanced details.
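Blending along an edge rather than across it can be expressed as a weighting function whose falloff depends on direction. The Python sketch below is a simplified illustration of that idea, not Google’s implementation: it assumes the local image gradient is already known (the gradient points across an edge) and uses a Gaussian with hypothetical `sigma_along` and `sigma_across` values.

```python
# Simplified sketch of edge-aware blending: a sample's weight falls off
# slowly along an edge and quickly across it, so noise is averaged along
# the edge while the edge itself stays sharp. The Gaussian shape and sigma
# values are illustrative assumptions.
import math


def edge_aware_weight(dy, dx, gy, gx, sigma_along=1.5, sigma_across=0.5):
    """Weight for a sample at offset (dy, dx) from the output pixel.

    (gy, gx) is the local image gradient, which points across the edge.
    """
    norm = math.hypot(gy, gx)
    if norm == 0:  # flat region: no edge, so blend equally in all directions
        return math.exp(-(dy * dy + dx * dx) / (2 * sigma_along ** 2))
    uy, ux = gy / norm, gx / norm   # unit vector across the edge
    across = dy * uy + dx * ux      # distance across the edge
    along = -dy * ux + dx * uy      # distance along the edge
    return math.exp(-(along ** 2) / (2 * sigma_along ** 2)
                    - (across ** 2) / (2 * sigma_across ** 2))
```

For a vertical edge (gradient pointing along x), a sample one pixel above the output pixel gets a weight of roughly 0.8, while a sample one pixel to the side, across the edge, gets roughly 0.14.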

Without robustness model (left) vs. with robustness model (right)

Furthermore, the company developed a ‘robustness’ model to detect and mitigate alignment errors when merging images. The model merges information from a frame only when it is confident it has found the correct place for it. Thanks to this model, Super Res Zoom photos avoid artifacts such as ‘ghosting’ and motion blur.
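One way to express “merge only when confident” is a weight that compares an aligned frame against the reference frame and asks whether the difference could be explained by noise alone. The Python sketch below is a hypothetical illustration of that idea; the linear falloff, the `robustness_weight` name and the tolerance factor `k` are assumptions, not Google’s published model.

```python
# Hypothetical sketch of a robustness check: after aligning a frame to the
# reference, compare the local difference with what noise alone would
# explain. Small differences suggest correct alignment (merge); large ones
# suggest motion or misalignment (skip). Formula and threshold are assumptions.
import math


def robustness_weight(ref_patch, aligned_patch, noise_std, k=3.0):
    """Return a merge weight in [0, 1] for an aligned patch.

    ref_patch, aligned_patch: equal-length lists of pixel values.
    noise_std: expected per-pixel noise standard deviation.
    k: hypothetical tolerance, in multiples of the noise level.
    """
    diffs = [a - b for a, b in zip(ref_patch, aligned_patch)]
    rms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    # Linear falloff: 1 for identical patches, 0 once the RMS difference
    # reaches k * noise_std.
    return max(0.0, 1.0 - rms / (k * noise_std))
```

A patch that differs from the reference by about one noise level keeps most of its weight, while a patch showing a moving object, far outside what noise explains, is excluded from the merge instead of leaving a ghost.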

Overall, it’s a very robust system that churns out surprisingly good results. With the Pixel 3, Google tackles the long-standing problem of poor digital zoom head-on.

If you want to learn more about the process, you can check out the Google blog here. Also, the company posted a Google Photos album featuring many uncropped Super Res Zoom images. They are seriously impressive (see an example below).

From top to bottom, you have no zoom, 2.37x zoom and 3x zoom on Pixel 3 XL

Source: Google Via: Android Police

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content. Support us here.