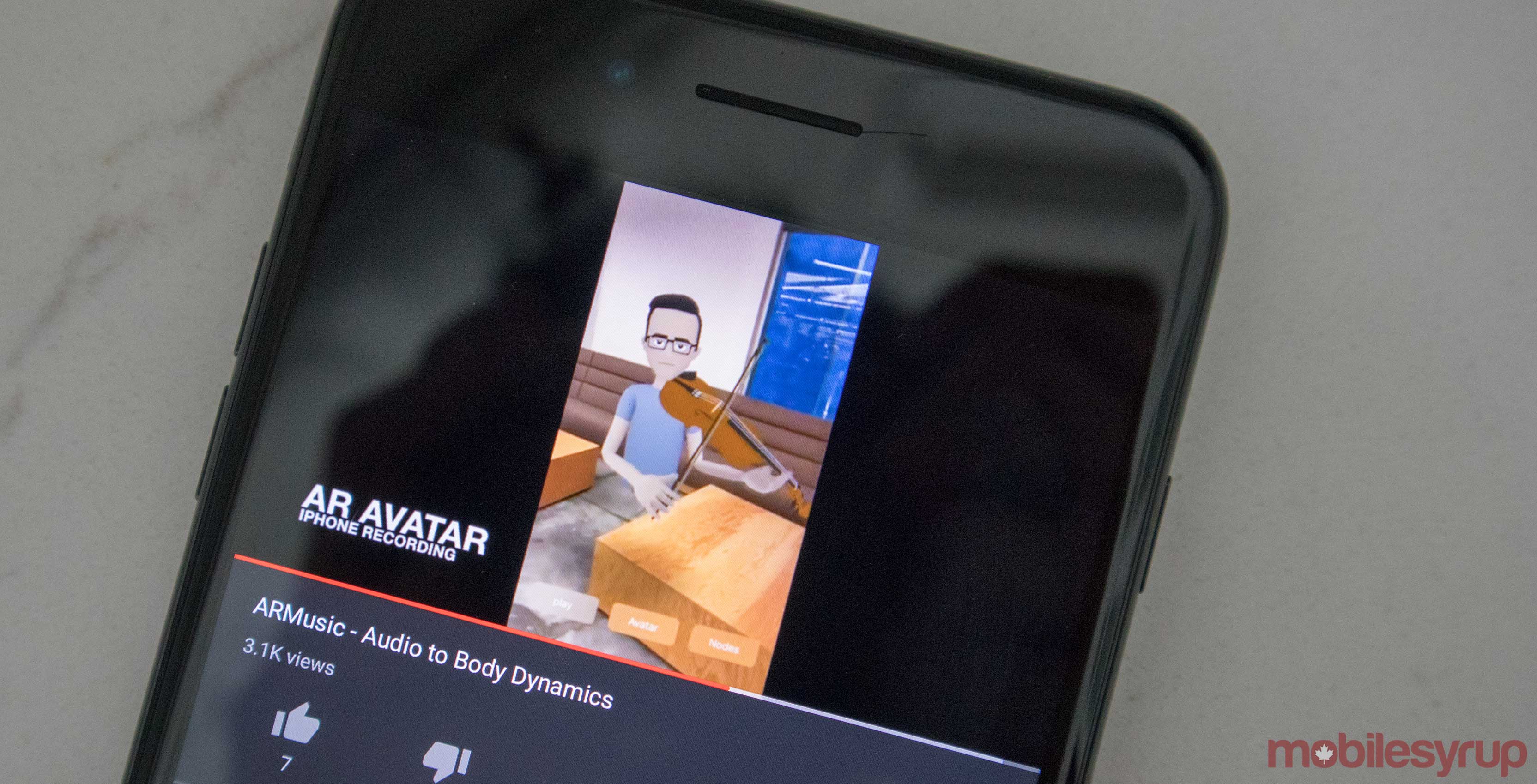

Facebook has revealed an augmented reality research project in which automated avatars mimic a musician’s movements.

The company teamed up with Stanford and the University of Washington on the project, which is called ‘Audio to Body Dynamics.’ The project was revealed at the Computer Vision and Pattern Recognition conference in Salt Lake City.

The team started this project to see if human movement could be predicted and recreated from music.

The team’s demo video shows off some of the animations, and it looks like there is still a way to go, since the finger movements aren’t perfect yet.

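The team created a long short-term memory (LSTM) network that was trained on piano and violin videos from the internet. An LSTM is a type of recurrent neural network: it processes a sequence one step at a time and uses gated memory cells to retain information from earlier steps, so what it outputs at any moment can depend on what came before.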
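To give a rough sense of how an LSTM "remembers," here is a minimal sketch of a single LSTM cell in plain Python. It is not the team's model: the weights are random rather than trained, the feature and hidden sizes are made up, and names such as `make_lstm_params`, `lstm_step` and `run_lstm` are hypothetical helpers chosen for illustration. The input frames stand in for per-frame audio features, and a real system would add an output layer to turn the hidden state into body positions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_lstm_params(input_size, hidden_size, seed=0):
    """Random (untrained) weights for the four gates: i, f, g, o."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        gate: {"W": matrix(hidden_size, input_size + hidden_size),
               "b": [0.0] * hidden_size}
        for gate in "ifgo"
    }


def lstm_step(params, x, h, c):
    """Advance one time step; returns the new hidden state and cell state."""
    z = x + h  # concatenate current input with the previous hidden state

    def affine(gate):
        W, b = params[gate]["W"], params[gate]["b"]
        return [sum(w * v for w, v in zip(row, z)) + bias
                for row, bias in zip(W, b)]

    i = [sigmoid(a) for a in affine("i")]    # input gate: how much new info to store
    f = [sigmoid(a) for a in affine("f")]    # forget gate: how much old memory to keep
    g = [math.tanh(a) for a in affine("g")]  # candidate values to write to memory
    o = [sigmoid(a) for a in affine("o")]    # output gate: how much memory to expose

    # The cell state is the "memory": old content scaled by f, plus gated new content.
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, sequence, hidden_size):
    """Feed a sequence of feature frames through the cell, one frame at a time."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    outputs = []
    for x in sequence:
        h, c = lstm_step(params, x, h, c)
        outputs.append(h)
    return outputs


# Toy example: the same made-up "audio frame" fed in twice.
params = make_lstm_params(input_size=3, hidden_size=4)
frame = [0.2, -0.1, 0.7]
outputs = run_lstm(params, [frame, frame], hidden_size=4)
```

Because the cell carries its state from one step to the next, the two identical frames produce different outputs: the second one is processed in the context of the first. That carry-over is what makes this kind of network a natural fit for sequences such as music.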

The team said it is still working toward reproducing the exact finger movements of a real musician, and that the current animations reflect its progress so far.

This tech could have other uses in the future, particularly in video games, where developers could use similar techniques to make non-player characters move more realistically.
