Facebook has announced that it’s reducing the size of News Feed posts that third-party fact-checkers have confirmed to be inaccurate.

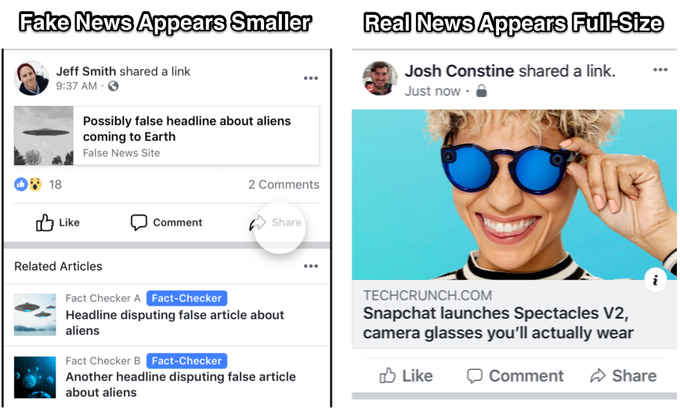

According to images obtained by TechCrunch, stories confirmed to be false will appear on mobile with their headline and image compressed into a single small row. Below these flagged posts, a ‘Related Articles’ box will refer the user to ‘Fact-Checker’-labeled stories that discredit the original inaccurate story.

Real news articles, meanwhile, will appear roughly 10 times larger, with headlines receiving their own dedicated space.

At the event, Facebook also revealed that it will use a combination of artificial intelligence and flagged user reports to moderate content on the social network.

Using machine learning, Facebook will be able to prioritize articles for fact-checking based on how likely they are to contain fake news.

These initiatives are part of what Facebook calls a larger “ecosystem” approach to combating fake news, which it says includes tackling the issue in the following ways:

Account Creation: Removing accounts belonging to apparent “bad actors” that have used fake identities or networks

Asset Creation: Facebook looks for similarities between created Pages so it can shut down clusters of them, as well as restrict any domains they’re connected to

Ad Policies: Pages that exhibit the aforementioned (or other) patterns of bad behaviour will be prohibited from buying or hosting ads

False Content Creation: Facebook will use machine learning to identify potential patterns of risk

Distribution: Fact-checkers will assist Facebook in discovering fake news; in response, Facebook will shrink the size of the post, append Related Articles to debunk it, and downrank it in News Feed

Altogether, Facebook says it can reduce the spread of a false news story by 80 percent using this ecosystem.

Source: TechCrunch Via: The Verge
