Artificial intelligence is the hot new tech kid on the block. It seems every major company wants to offer some kind of AI service. Whether that’s a digital assistant on your phone, software that provides intelligent suggestions to improve your writing or cars that can drive themselves, AI is present.

AI, however, is not universally good. Like any tool, people can misuse AI, but on a scale much greater than what we’re used to. For example, artificial intelligence can have inherent biases, created by the people who work on them. These are typically unintended, but they can exist in a system all the same.

That’s not the only problem with AI applications. How do facial recognition systems take into account privacy? How do AI systems trick people into thinking they can do things they can’t?

These are important questions. Not many people are offering answers, but Microsoft is trying to.

Microsoft’s general manager of AI programs, Tim O’Brien

At the Redmond-based company’s annual Build developer conference in Seattle, Washington, I sat down at a session with Tim O’Brien, Microsoft’s general manager of AI programs. O’Brien spoke to these challenging questions and how the company is working with customers and governments to develop regulations and improve understanding of AI and its potential impact.

On top of that, O’Brien went in depth on Microsoft’s principles surrounding responsible AI. These principles are core to the way Microsoft thinks about AI, creates AI and does business with AI.

Fair and inclusive: AI shouldn’t exclude

Fairness and inclusivity are two of Microsoft’s principles when it comes to AI, but in many ways, they’re fruits of the same tree.

When building AI systems, inclusivity is crucial. Microsoft believes that these systems should empower everyone and engage people, not exclude them.

O’Brien, unsurprisingly, pointed to examples of Microsoft using AI to include people, like how Office apps use machine reading features to help users with visual impairments. Another example would be the company’s extensive work with live transcription features — which appeared throughout many of the keynotes and presentations at Build — where apps like PowerPoint would create captions as presenters spoke so people with hearing impairments could follow along.

In other words, AI can be an incredibly powerful tool for people with disabilities, so those creating AI applications should go beyond making sure systems don’t exclude people by creating intelligent ways to include everyone.

Fairness comes into play here as well, as sometimes it can be challenging to create AI tools for people who are different than the creators. A New York Times piece about an MIT researcher revealed just how prevalent this problem could be.

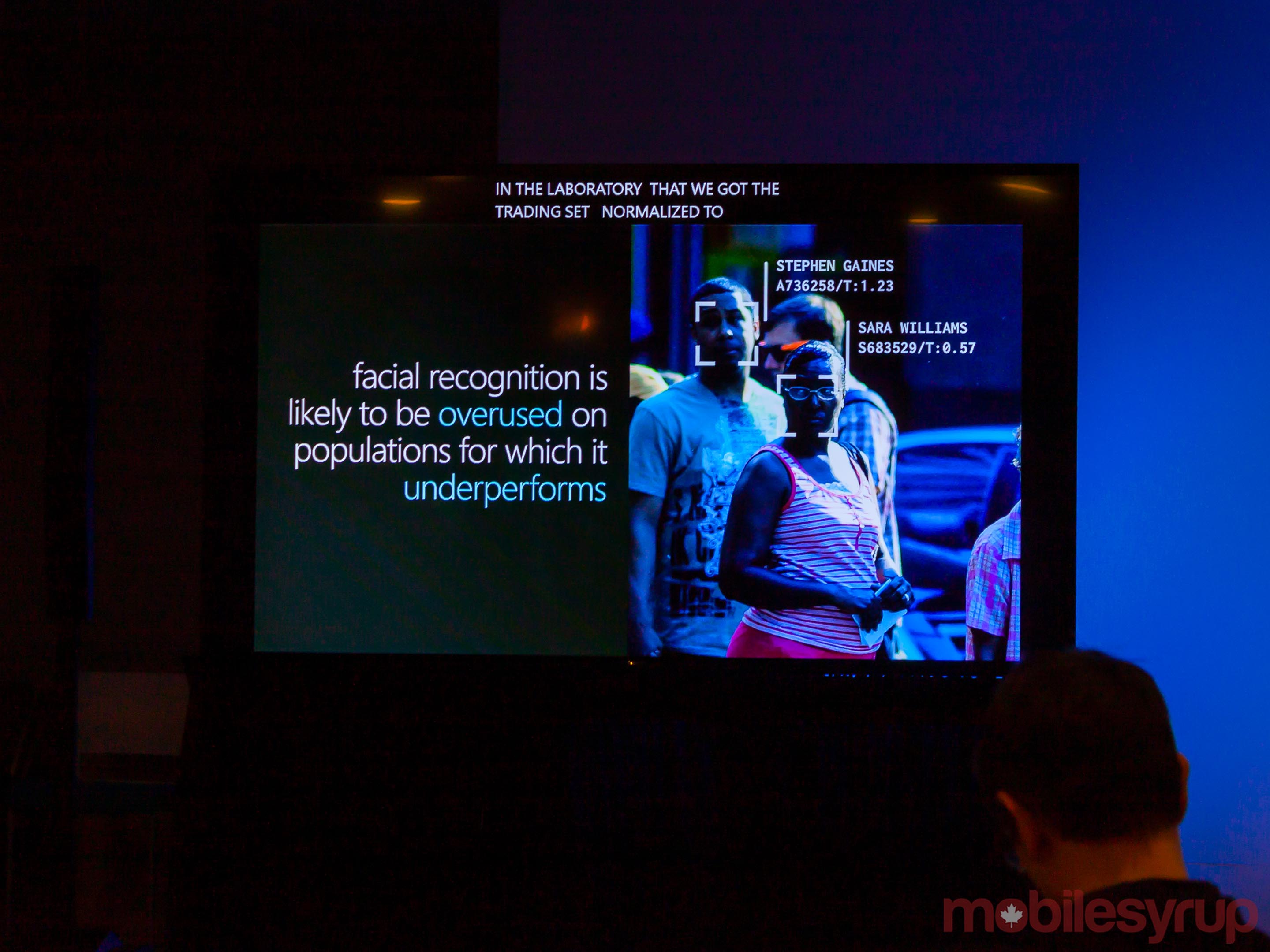

According to the article, the MIT researcher uncovered significant biases in facial recognition software. Essentially, the software was excellent at identifying white males, but errors increased significantly when it had to identify women or people of colour.

The issue, in this case, was weak data. AI systems are only as good as the data they’re trained on, and ingrained human biases can affect the data fed to machine learning algorithms. Reportedly, one widely-used data training set was estimated to be over 75 percent male and more than 80 percent white.

O’Brien took this a step further, noting that facial recognition systems are likely to be overused on populations for which it underperforms. With facial recognition systems — and other AI applications — creators should strive for fairness, primarily because people use tools in ways creators could never imagine.

Reliability and safety: AI shouldn’t cause harm

The next principle that O’Brien discussed was the idea of reliability and safety in AI. Most would agree with the sentiment that AI should never hurt anyone. However, people, not AI, may be the more significant issue.

O’Brien spoke about automation bias, a human knack for favouring automated suggestions and ignoring contradictory information from non-automated sources, even if it’s correct. While this may not seem like a big problem in an intelligent spell check system where the worst case scenario might be a misplaced comma, automation bias can have disastrous consequences in other areas.

Through automation bias, AI systems can give people the illusion that they have permission to do something they shouldn’t.

One example O’Brien provided was a late-2018 case of an intoxicated driver passing out behind the wheel of a Tesla driving through Palo Alto, California. According to reports, the driver allegedly engaged the vehicle’s Autopilot system to take him home. The problem here is that, at the time, Tesla vehicles were capable of Level 3 autonomy, of the five possible autonomy levels.

The issue is that Level 3 autonomy uses the driver as a fallback system. In other words, the car can drive itself, but if anything happens, it will ask the driver to step in. Drivers cannot let the automation go without supervision. It’s worth noting that Tesla vehicles equipped with the company’s Full Self-Driving package should be Level 5 autonomous by the end of 2019.

When u pass out behind the wheel on the Bay Bridge with more than 2x legal alcohol BAC limit and are found by a CHP Motor. Driver explained Tesla had been set on autopilot. He was arrested and charged with suspicion of DUI. Car towed (no it didn’t drive itself to the tow yard). pic.twitter.com/4NSRlOBRBL

— CHP San Francisco (@CHPSanFrancisco) January 19, 2018

Worse, this isn’t the only time drivers used Tesla’s automation systems inappropriately.

The Palo Alto case is another example of automation bias, according to O’Brien. In this case, the person involved felt he had permission to get behind the wheel while intoxicated because the car would do the driving — not him.

AI creators must approach systems responsibility with the knowledge that, through automation bias, those creations may allow people to believe they have permission to do something they can not or should not do.

Privacy and security: AI should ask for consent

The idea that AI systems should be secure and protect users’ privacy is another principle O’Brien discussed. As with other policies, this is a multi-faceted issue.

On the one hand, there’s the issue of data. AI systems need vast amounts of data. How can these systems leverage user data in ways that don’t reduce or remove privacy? This is something Microsoft is thinking about a lot.

In the case of its Azure Speech Services, which the company showed off at Build, the team behind it created a model that can then learn from an organization’s data stored in Microsoft 365. With this approach, Microsoft engineers are hands-off on the data; they can’t see customer data or interact with it. Only the speech services model deployed to that particular company can interact with the data.

There are other problems with privacy as well, such as consent.

People recently discovered two Canadian malls used cameras and facial recognition software embedded in directory kiosks without telling users. The malls’ parent company claimed the software didn’t store images — and therefore consent wasn’t required. Further, the company said the software could only tell the gender and approximate age of a person and was used to gather aggregate data about what, say, 60-year-old men typically searched for at the directory.

https://twitter.com/mackenzief/status/1118509708673998848

Another example is the case of MacKenzie Fegan, who tweeted about her experience boarding an airplane. Instead of handing over a passport or boarding pass, an automated system scanned her face, and she was allowed onto the plane.

To make matters worse, the airline compared the scan against data collected by the U.S. government and loaded into a Customs and Border Protection database.

We should clarify, these photos aren't provided to us, but are securely transmitted to the Customs and Border Protection database. JetBlue does not have direct access to the photos and doesn’t store them.

— JetBlue (@JetBlue) April 17, 2019

The issue with both these events is that users never provided consent, and worse, opting out was hardly an option. In the case of the mall kiosk, users could opt out of the facial scan by not using the directory — hardly a fair way to approach consent — and in Fegan’s case, the government shared her data before she even knew there was a program she might want to opt out of.

If you haven’t seen those tweets before, I highly encourage you read through the whole thread.

Transparency and accountability: AI should be understood

These final principles around sustainable AI are incredibly important, especially in automated systems that interact directly with or have an impact on humans. O’Brien said that we should be able to explain AI systems and that they should have algorithmic accountability.

To the first point, imagine an AI system used in hospitals determined your ailment didn’t require in-patient treatment. Wouldn’t you want to know why?

O’Brien discussed an instance just like that, where an automated system was created to assess the severity of pneumonia and determine an optimal treatment plan — whether in- or out-patient treatment, to escalate them to the front of the queue and more. The goal was to speed up waiting rooms by automatically prioritizing those who needed care while reducing the number of non-critical patients.

However, after training the system on data, it determined that people with asthma who got pneumonia were ‘low-risk.’ Any doctor will tell you that is false. What happened was that asthmatics tend to be more sensitive with their chest and lungs, and are more adept at detecting if something is wrong and seeking treatment accordingly. Further, doctors would likely prioritize a person with asthma who gets pneumonia. As such, the data didn’t reflect people with asthma accurately, and the AI developed a critical error.

Thankfully, doctors opted to go with a different, easier to understand version of the AI so that they can see how it comes to conclusions and judge the accuracy of those conclusions accordingly.

If you can’t understand how AI reaches a decision, how can you hold it accountable? And who is responsible when things go wrong?

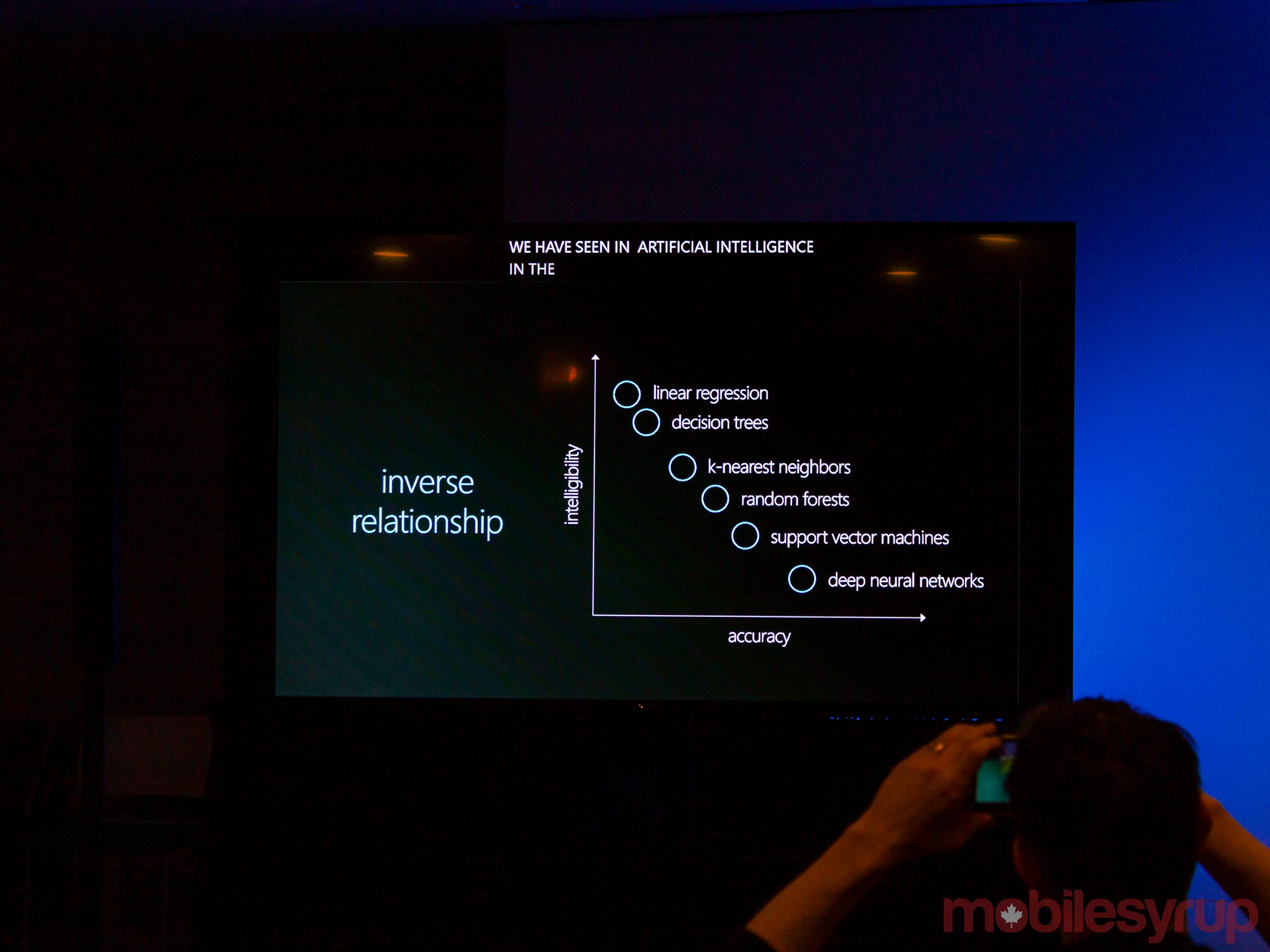

There are several different types of machine learning and AI models, from linear regression to random forests and deep neural networks. According to O’Brien, there is an inverse relationship between the understandability of an AI system and its accuracy. For example, linear regression is easier to understand and explain, but deep neural networks are far more accurate.

Transparency becomes incredibly important when it comes to accountability. If a person can’t understand or explain why or how an AI comes to a specific result, how can that system be accountable when things go wrong? More importantly, who can be held responsible?

This issue is already before the courts as a business tycoon from Hong Kong launched a lawsuit against the salesman who convinced him to invest in an AI-operated hedge fund. The AI’s poor trades allegedly cost the businessman over $20 million USD ($26.9 million CAD).

Microsoft isn’t perfect, but it wants to be better

Despite the extensive list of principles outlined in his talk, O’Brien maintained that Microsoft isn’t perfect. The company is still working through these ideas, thinking about them and figuring out its path.

However, O’Brien also said Microsoft wants to work with governments to regulatory frameworks for AI. In the U.S., the company is doing a lot of work at the state level when it comes to regulation and legislation, as the states are more agile than the federal government.

There’s also the matter of who purchases the tools. O’Brien said Microsoft makes decisions about who to sell to — government, military or otherwise — based on what the customer wants to use the tech for. Further, the company avoids selling AI tech for scenarios that go against its principles, and it lets employees opt out if they feel uncomfortable working on a project for a specific customer.

Microsoft is also investing in cross-cultural research and ways it can respect local and cultural attributes without imposing western values through AI systems it creates.

Finally, the company has numerous mechanisms in place to ensure customers don’t misuse AI tools it creates for them.

All of these are part of ongoing research as well. Microsoft continuously looks for ways to improve AI, solidify principles and create responsibly. It’s an open, honest and impressive stance.

Artificial intelligence is the future, and Microsoft wants to make sure it’s a responsible one.

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content. Support us here.