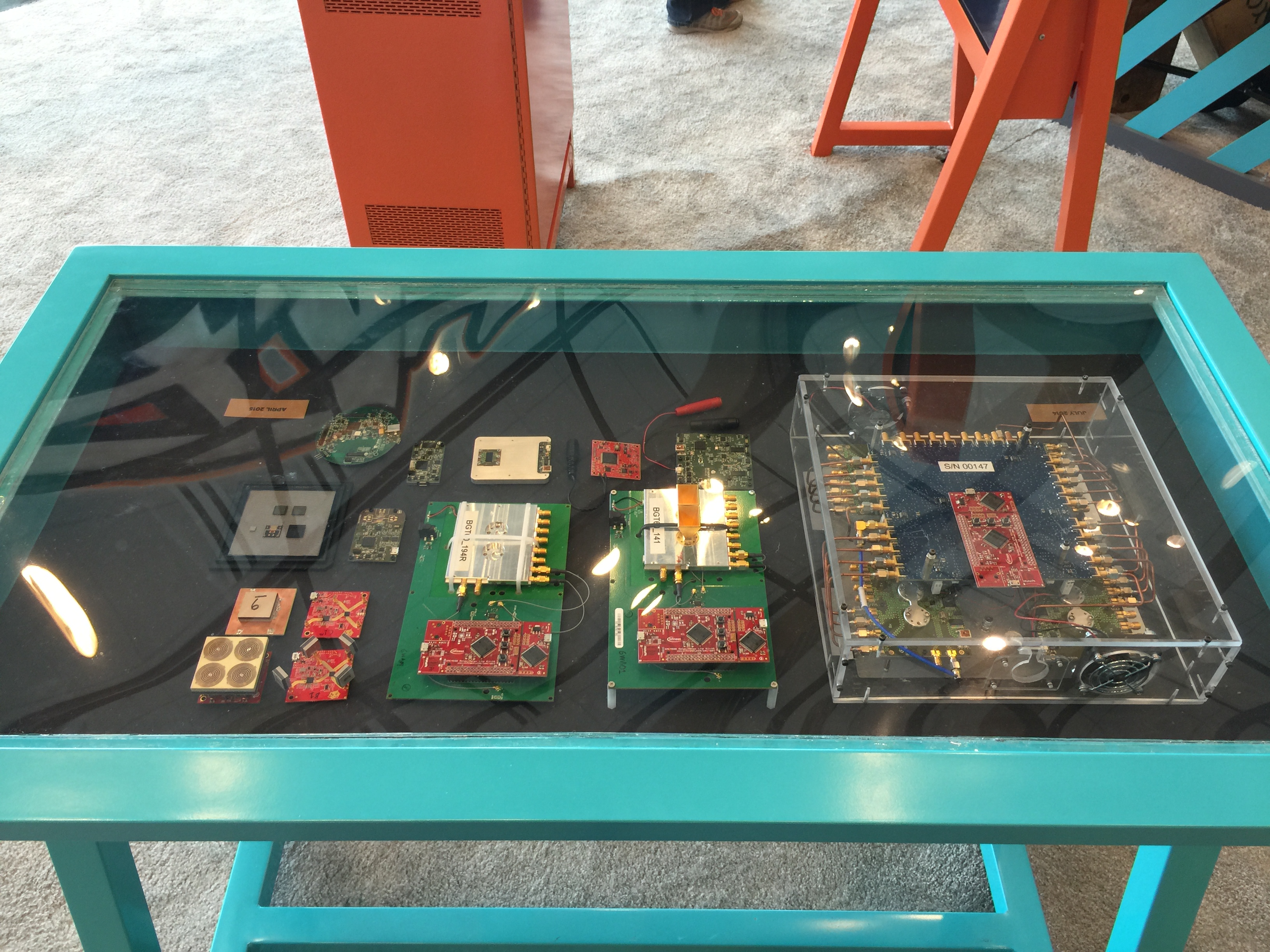

Google’s ATAP division is no stranger to moonshots. The group behind modular smartphones (Ara) and a tablet with embedded three-dimensional cameras (Tango) has now created a solution (or the beginnings of one) for hand-based gesture input using radar.

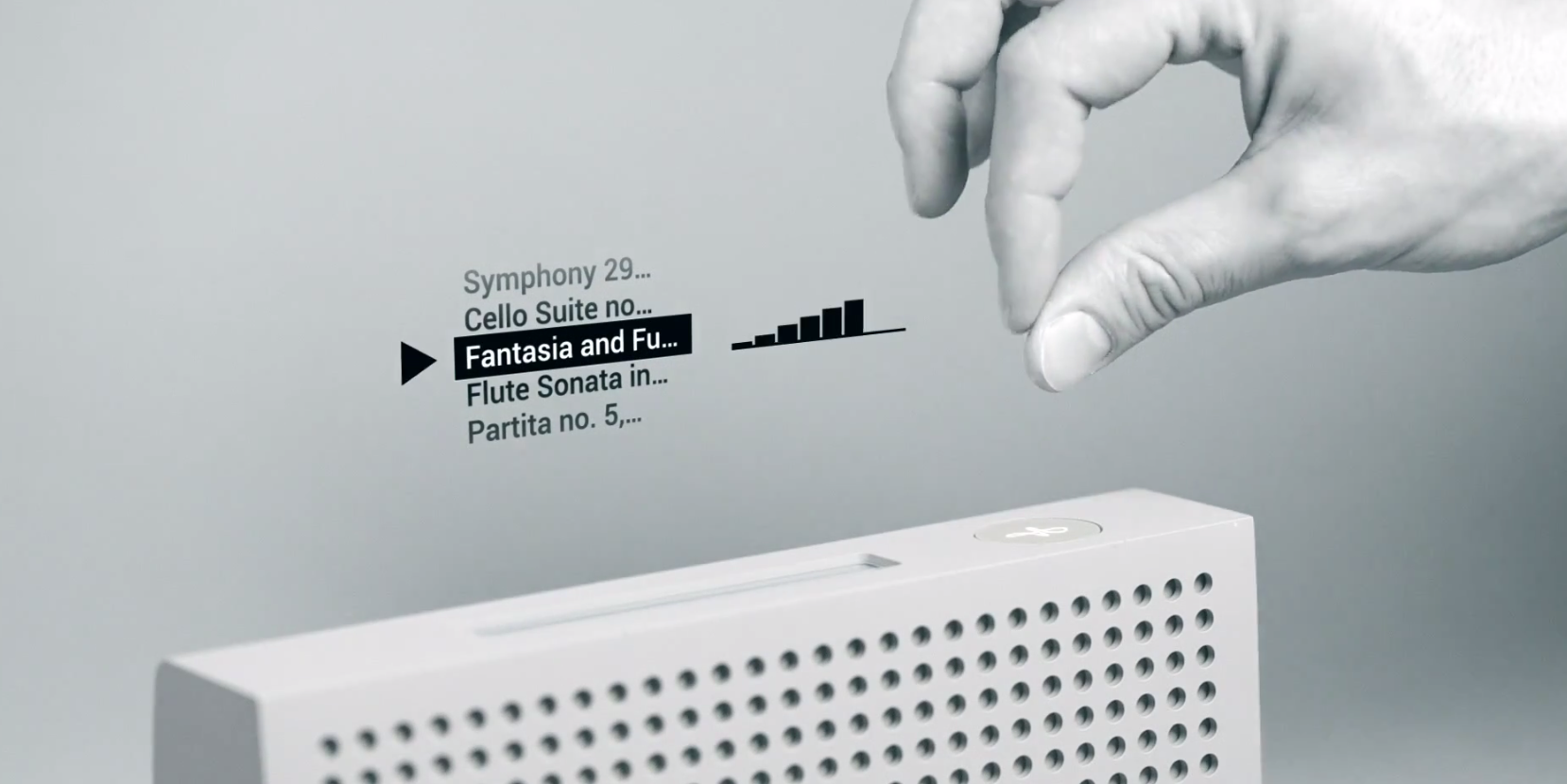

Operating at 60 GHz in the RF spectrum, Project Soli lets hands perform very precise actions – tapping, squeezing, rubbing – in open space and have them relayed into digital form, either on a large screen or on a mobile device.

Soli is far from finished, and a lot of work remains before it can appear in shipping products, but the high-bandwidth nature of our fingers makes it easy to believe that the future of input isn’t just on screens, but near them.

Source: Soli

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content.