Google’s Pixel line has always offered solid photography, including a frankly stunning Portrait Mode that far outshone most other smartphones.

While a lot has changed since Portrait Mode debuted on the Pixel 2 line, most notably that competitors have caught up in a lot of ways, the Pixel 4 continues to improve on the formula. For those who don’t know (or don’t remember), the Pixel 2, 3 and 3a lines had only one rear camera. Unlike other manufacturers, which used secondary cameras to help detect depth for Portrait Mode shots, Google relied on dual-pixel technology.

The dual-pixel system essentially splits each pixel in half, allowing the software to read each half separately and get two marginally different images. In particular, the background shifts relative to the subject between the two images, and the size of that shift depends on the distance between the subject and the background. This apparent shift is known as parallax.

An easy way to think of it is to compare it to how your eyes work. Because you receive visual information from two separate points on your face, your brain can calculate depth from the slightly different view each eye gets.

You can demonstrate it yourself by lifting your arm straight out in front of you and sticking up your thumb. Close one eye, then switch and close the other eye. The background behind your thumb will appear to move while your thumb remains in the same spot.

Dual-camera setups can improve depth estimation

While the Pixel line used dual-pixel technology along with a healthy dose of Google’s machine learning to achieve excellent Portrait Mode results, dual-pixels aren’t a perfect solution. For example, it can be more difficult to estimate the depth of a far-away scene because the two halves of each pixel are less than 1mm apart, which produces only a tiny shift. In a blog post, the Google AI team explained how pairing dual-pixel technology with a dual-camera setup can improve depth estimation.

The Pixel 4 has a wide-angle primary camera and a telephoto secondary camera, and the two are 13mm apart, compared to the less than 1mm separating the dual-pixel halves. This larger distance, known as the baseline, creates more dramatic parallax, which can improve depth estimates for far-away objects.
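To put rough numbers on that, here is a minimal Python sketch that treats both the dual-pixel halves and the two cameras as an idealized stereo pair, where the shift between subject and background is d = f × B × (1/Z_subject - 1/Z_background). The focal length in pixels (3,000) and the 5 m and 20 m distances are hypothetical values chosen for illustration, not Pixel 4 specifications, and real dual-pixel disparity also depends on focus and lens aperture, which this simple model ignores.

```python
def relative_disparity_px(focal_px: float, baseline_m: float,
                          subject_m: float, background_m: float) -> float:
    """Shift (in pixels) of the background relative to the subject for an
    idealized pinhole stereo pair: d = f * B * (1/Z_subject - 1/Z_background)."""
    return focal_px * baseline_m * (1.0 / subject_m - 1.0 / background_m)


FOCAL_PX = 3000.0               # hypothetical focal length in pixels, not a Pixel 4 spec
DUAL_PIXEL_BASELINE_M = 0.001   # upper bound: the halves are "less than 1mm" apart
DUAL_CAMERA_BASELINE_M = 0.013  # 13mm between the Pixel 4's two rear cameras

# A far-away scene (hypothetical distances): subject at 5 m, background at 20 m.
for name, baseline in [("dual-pixel", DUAL_PIXEL_BASELINE_M),
                       ("dual-camera", DUAL_CAMERA_BASELINE_M)]:
    d = relative_disparity_px(FOCAL_PX, baseline, subject_m=5.0, background_m=20.0)
    print(f"{name}: {d:.2f} px")
```

Under these assumptions the dual-pixel shift is at most about 0.45 px, well under one pixel, while the 13mm baseline gives about 5.85 px, 13 times more. Because the shift also shrinks as both distances grow, the wider baseline matters most for distant scenes.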

However, the addition of an extra camera doesn’t make dual-pixel technology useless. For one, the Pixel 4’s telephoto lens has a minimum focus distance of 20cm, so the phone can’t use the second camera to detect depth for subjects closer than that. Further, estimating depth can be difficult for lines that run in the same direction as the baseline, the direction in which the two views are offset.


In other words, it’s difficult to estimate the depth of a vertical line when the pixel halves or cameras are offset vertically, because sliding a vertical line along its own length leaves it looking the same. On the Pixel 4, however, the dual-pixel baseline and the dual-camera baseline are perpendicular, so the phone can estimate depth for lines of any orientation.
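The toy Python example below illustrates that ambiguity. It builds a tiny image containing a single line, creates a second view by shifting it vertically (standing in for a vertical baseline), then scores every candidate shift by how well the two views line up. The 8 × 8 image size, the true shift of 2 pixels and the wrap-around shifting are simplifications assumed for this sketch; Google describes its depth estimation as a learned model, not this brute-force search.

```python
def make_image(h, w, orientation):
    """Toy h x w image containing one bright line, 'vertical' or 'horizontal'."""
    if orientation == "vertical":
        return [[1.0 if c == w // 2 else 0.0 for c in range(w)] for _ in range(h)]
    return [[1.0 if r == h // 2 else 0.0 for _ in range(w)] for r in range(h)]


def roll_rows(img, k):
    """Shift the image down by k rows with wrap-around (a stand-in for a
    vertical baseline that avoids dealing with image borders)."""
    h = len(img)
    return [list(img[(r - k) % h]) for r in range(h)]


def matching_costs(ref, other, max_shift):
    """Mean squared difference between `other` and `ref` shifted down by each
    candidate k in 0..max_shift; lower means a better match."""
    h, w = len(ref), len(ref[0])
    costs = []
    for k in range(max_shift + 1):
        shifted = roll_rows(ref, k)
        sq = sum((other[r][c] - shifted[r][c]) ** 2 for r in range(h) for c in range(w))
        costs.append(sq / (h * w))
    return costs


TRUE_SHIFT = 2  # hypothetical vertical disparity between the two views
for orientation in ("vertical", "horizontal"):
    ref = make_image(8, 8, orientation)
    other = roll_rows(ref, TRUE_SHIFT)
    costs = matching_costs(ref, other, max_shift=4)
    best = [k for k, cost in enumerate(costs) if cost == min(costs)]
    print(orientation, "line -> best shifts:", best)
```

The vertical line matches equally well at every candidate shift, so its depth can’t be recovered, while the horizontal line has exactly one best match, at the true shift of 2. Running the same search with a horizontal offset would flip the result, which is why having two perpendicular baselines helps.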

Finally, Google said it trained the machine learning model used for depth detection to work with dual-pixels, dual-cameras or both. That means depth detection still works if one of the inputs isn’t available, such as when a close-up shot prevents the telephoto camera from focusing. It also means the model can combine both inputs, when they’re available, for a better overall result.

More SLR-like bokeh

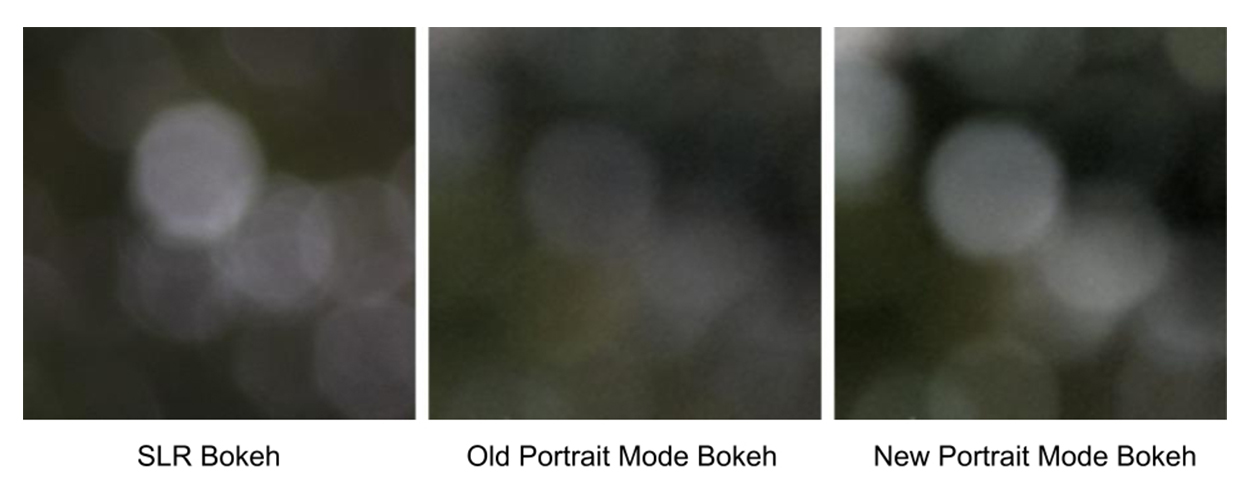

Google also improved Portrait Mode’s bokeh effect so it better mimics an SLR camera. For example, high-quality SLR bokeh turns small background highlights into bright disks that keep their brightness, whereas portrait modes on phones tend to dim them.

Google changed how it processes images so that it blurs the raw HDR+ image before applying tone mapping, which preserves the brightness of these highlights. Processing images in this order also helps preserve saturation, so the blurred background matches the saturation of the foreground. These changes aren’t exclusive to the Pixel 4, either: they also came to the Pixel 3 and 3a with Google Camera app version 7.2.
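A one-dimensional toy example shows why the order matters. This minimal Python sketch assumes a hard clip to [0, 1] as the tone curve and a simple box blur as the bokeh; both are stand-ins, since HDR+ uses far more sophisticated tone mapping and blur. The scanline values are made up for illustration.

```python
def box_blur(signal, radius):
    """1D box blur; near the edges it averages over whatever neighbours exist."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def tone_map(signal):
    """Toy tone curve (an assumption): clip linear HDR values to [0, 1]."""
    return [min(max(x, 0.0), 1.0) for x in signal]


# Hypothetical linear HDR scanline: dim background (0.1) with one very bright
# point light (8.0), far brighter than the display can show.
hdr = [0.1] * 11
hdr[5] = 8.0

tonemap_then_blur = box_blur(tone_map(hdr), radius=2)  # clip first, then blur
blur_then_tonemap = tone_map(box_blur(hdr, radius=2))  # blur linear data first

print(f"peak, tone map then blur: {max(tonemap_then_blur):.2f}")
print(f"peak, blur then tone map: {max(blur_then_tonemap):.2f}")
```

Tone mapping first clips the point light to 1.0 before the blur spreads it out, so its peak drops to about 0.28. Blurring the linear data first spreads the light’s full energy across a five-pixel "disk" that still saturates at full brightness after tone mapping, which is the SLR-like behaviour described above.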

Overall, these new changes should lead to better Portrait Mode shots. If you’re interested in the nitty-gritty of how it all works, check out the Google blog post for all the details.

Source: Google Via: 9to5Google

MobileSyrup may earn a commission from purchases made via our links, which helps fund the journalism we provide free on our website. These links do not influence our editorial content.