Facebook has released its first Community Standards Enforcement Report to provide information on enforcement efforts for posts containing graphic violence, nudity and terrorist propaganda, among other things.

The report is the company’s response to public doubts about how effectively the social media giant prevents, identifies and removes content that violates its rules.

Most of the metrics Facebook shared are still preliminary, but they offer a look at concrete results from the company’s fight against abuse on its platform. Facebook says it is still refining its metrics to make the data as accurate as possible.

Most of the report focuses on measuring how prevalent Community Standards violations are on Facebook.

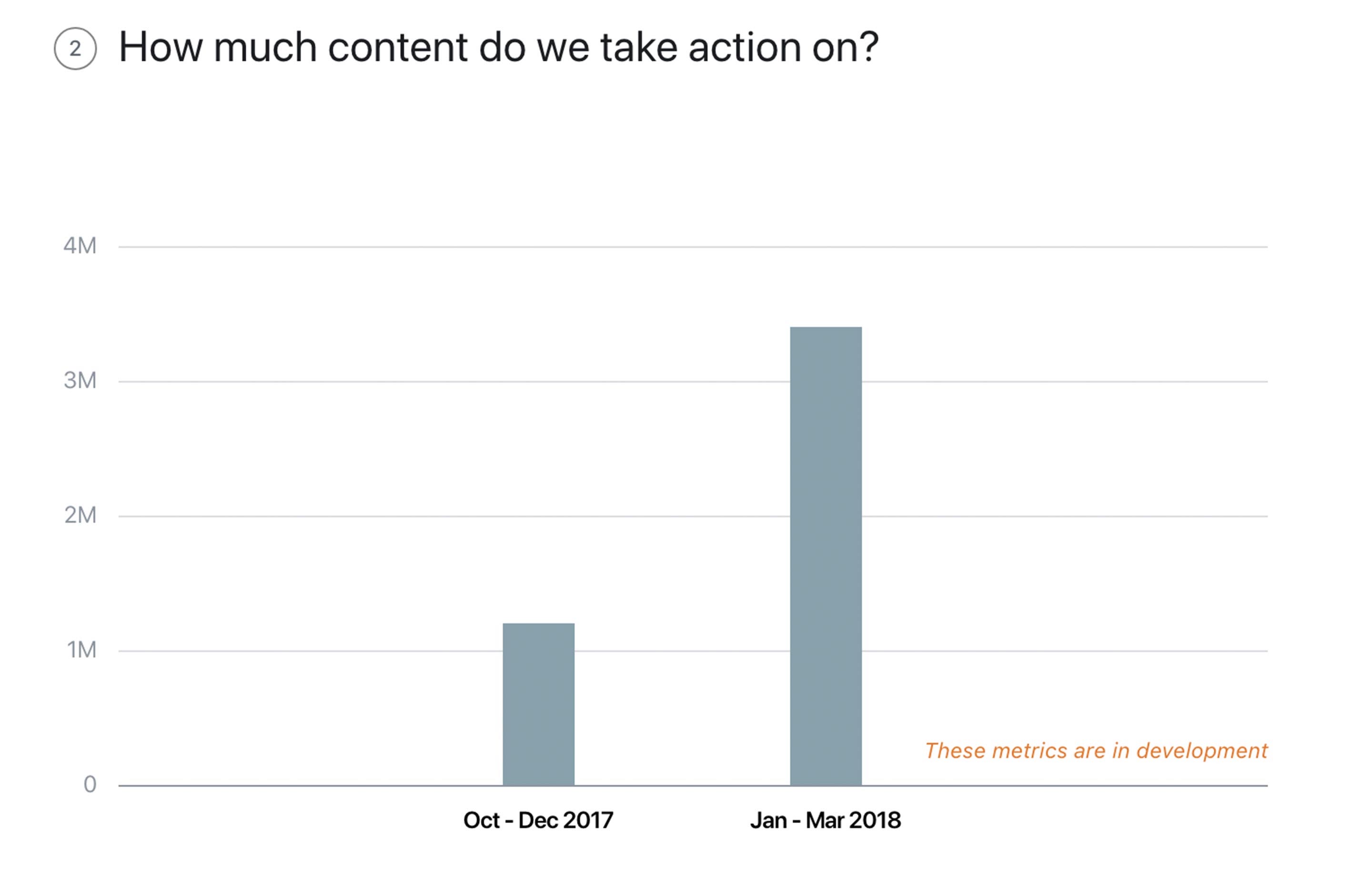

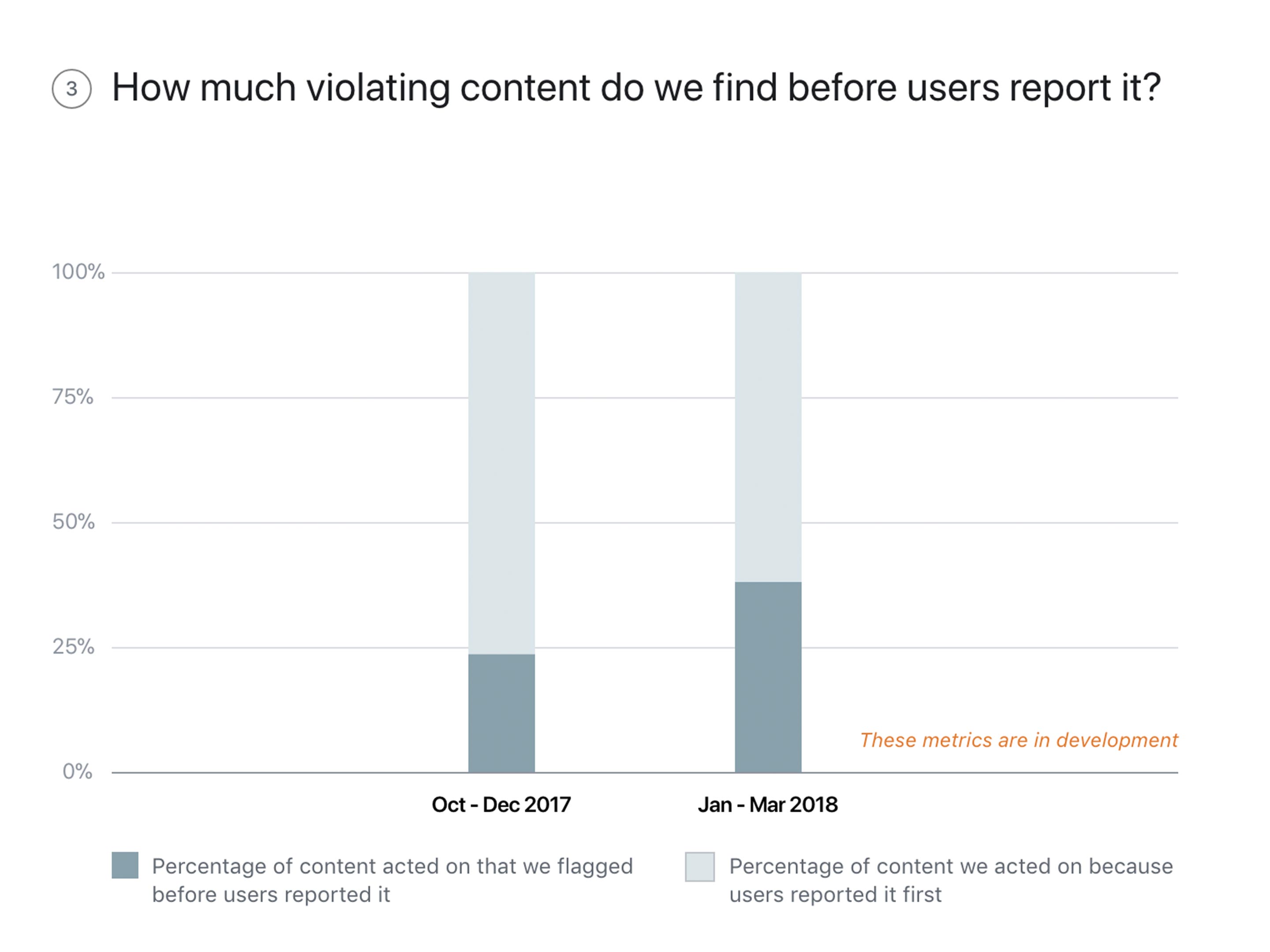

To measure these violations, the company is looking at how much content it takes action on, how much standards-violating content is found before it gets flagged and how quickly the company takes action.

According to a ProPublica report, Facebook has been notoriously bad at flagging and taking down hate speech. In that report, Justin Osofsky, one of Facebook’s vice presidents, stated that the company would double the size of its safety and security team in 2018, so it will be interesting to see whether the hate speech numbers improve over the course of the year.

Facebook claims that an average of 25 out of every 10,000 posts on the platform contain graphic violence and must be removed. The company says it took action on 3.4 million such posts from January to March 2018. Around 86 percent of the graphic violence content it acted on was found by the company before a user flagged it.

The social network estimates that around 8 out of every 10,000 viewed posts on Facebook contain sexual activity or nudity, a slight increase from the previous quarter, when the company estimated 6 to 8 such posts per 10,000 views.

The company took action on 21 million pieces of nudity and sexual content, 96 percent of which was identified by Facebook before users reported it.

Facebook also reports that it took action on 1.9 million pieces of terrorist propaganda, 99.5 percent of which were flagged by the company before users reported them.

Hate speech is where the company lags. Facebook took action on around 2.5 million pieces of hate speech, but most of it was flagged by users: only 38 percent was found by Facebook before it was reported.

Facebook further states that it removed or covered 837 million spam posts, and that nearly 100 percent of them were detected by the company before anyone reported them.

The company estimates that 3 to 4 percent of its monthly active users are actually fake accounts. In the most recent quarter, the company disabled 583 million fake accounts, down from 694 million in the previous quarter.

About 98 percent of these accounts were taken down before they were able to interact with real users. This figure covers only fake accounts that were successfully created; accounts blocked during registration are not counted.

Source: Facebook